Should kids have smartphones?

We sell a lot of phones to parents in July and August, just before their kids start secondary school (age 12), but it has become much more common to see kids as young as 10 with iPhones. Up until that point, they're quite happy just having an iPad to play games and watch YouTube on, but when other kids in their class start Snapchatting, it's only natural that they want to join in.

Is this a bad thing?

Smartphones provide quick, easy, "cheap" dopamine, i.e. reward without effort (never good), and this makes them addictive but ultimately unfulfilling. These effects can be mitigated by limiting total exposure and ensuring that they do not displace the activities and behaviours that we know are critical for our health and well-being: exercise, time in nature, and social connection.

A smartphone is about as close to necessary a possession as there is. To not have a smartphone in 2023 is to risk being disconnected from others and failing to develop the technological prowess and relevant soft skills required to function in modern society. At the very least, it'd be damn inconvenient, and this is especially true for younger generations.

In my opinion, the benefits of technology significantly outweigh the costs at an aggregate level, and I believe this to be true in the case of smartphones - for most people in most cases. Mindfully and appropriately used, our smartphones enable us to be more productive, better connected, and less stressed. What constitutes mindful and appropriate usage is debatable and will vary from person to person.

It's far more difficult to make this case for social media - in young people at least.

The average age at which someone signs up for a social media account is 12.6 years.

There's sufficient evidence to suggest that social media platforms are the leading contributor to the current mental health crisis among teens. Reports of anxiety, depression and suicide attempts amongst young people began skyrocketing in the early 2010s, just as social media was becoming increasingly popular and accessible.

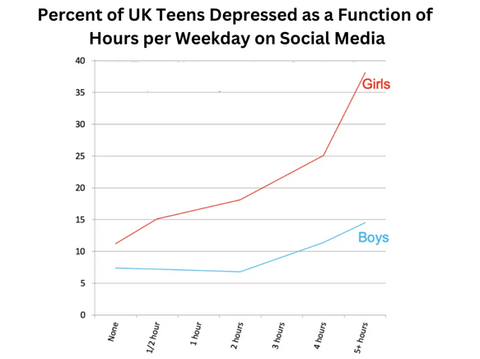

The data continues to show that social media usage correlates with poorer mental health outcomes and that the magnitude of this effect is notably greater in teens.

Moderate use appears to have little effect, whereas heavy use has a disproportionately greater effect. Mood disorders are more prevalent in girls than boys. For boys, the depression rate doubles as daily use increases from 2 to 5 hours. For girls, it triples.

Would you let your child vape?

It has perhaps always been expected that a teenager would sneak a cigarette or a beer when they got the chance, and this was seldom cause for concern. By contrast, the notion of carrying and hitting a vape all day, every day should be far more alarming to a parent. But what if we are glossing over a device that is far more addictive and destructive than a vape?

Our prefrontal cortex - the part of the brain associated with complex decision-making and impulse control - isn't fully developed until around 25. This is why, both by law and as parents, we restrict freedom of choice until the age of 18.

It's obvious that children shouldn't have unlimited access to drugs, pornography and gambling.

Can we really expect a child to exercise appropriate caution with respect to social media use?

In my experience and observation, practising healthy habits and moderation is difficult enough as an adult, so it'd be unfair to expect a child to make informed and responsible decisions for themselves. Peer pressure only compounds the issue: no teen wants to be the one weird kid without social media, and no adult wants to be the one out-of-touch, overly strict hippy parent who doesn't allow it.

I believe that an adult should be able to do whatever they want - within reason and provided it doesn't cause harm to others. At what age we draw the line, and for which choices, is open to debate, but I think that, based on the mountains of data and anecdotes, there should be strict age and/or usage limits on social media use specifically. Not doing so sets up a non-trivial proportion of young people for failure.

I think that smartphones (and computers) are essential items for just about everyone including teens, and whilst they have their dangers and downsides, we can mitigate them with appropriate supervision and education, and this should be the role of parents and teachers.

You remember how "growing up" was at times really difficult and disconcerting. Technology has only made it more challenging, which is why we should sympathise with the youth of today, even though we might not understand them. Younger generations are the first to grow up with an iPad. We don't know exactly what effect this has, but it's clearly a significant one - one that has the potential to damage malleable minds, which could lead to greater societal problems.

It's important to note that these issues relate to internet access and that the smartphone is just a vessel for this.

Smartphones and social media are not synonymous. You cannot blame the technology for ruining a generation. It's how we use it that matters. For the most part, we are all subject to the same benefits, challenges and risks, regardless of age. Social media is an outlier because it has a disproportionately harsher and wider effect on young people.
